Masked Autoencoders Are Scalable Vision Learners

* Authors: [[Kaiming He]], [[Xinlei Chen]], [[Saining Xie]], [[Yanghao Li]], [[Piotr Dollar]], [[Ross Girshick]]

First Impressions

comment:: Proposes a BERT for computer vision: by predicting randomly masked image content, the model achieves strong pre-training results.

Paper Outline

What is novel

novelty:: Proposes a BERT-like denoising, generative self-supervised method for CV.

Why it matters

significance:: Introduces a generation-based paradigm to self-supervised CV (as opposed to contrastive-learning paradigms such as MoCo).

What potential it has

potential:: Stronger pre-trained models obtained with less compute.

Paper Walkthrough

Self-Supervised Learning: An Ultra-Detailed Walkthrough (6): MAE: Toward Large CV Models - Zhihu (zhihu.com)

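Why generative self-supervision is hard to apply in vision

BERT appeared in 2018, so why did BERT-like methods for CV such as BEiT and MAE only arrive in 2021? Kaiming He gives three reasons:

- The mainstream architectures differ: NLP is dominated by Transformers, whereas CV before ViT was dominated by CNNs. A CNN focuses on local information and lacks the global token concept that ViT has, so this kind of patch-level masking does not carry over to CNNs.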

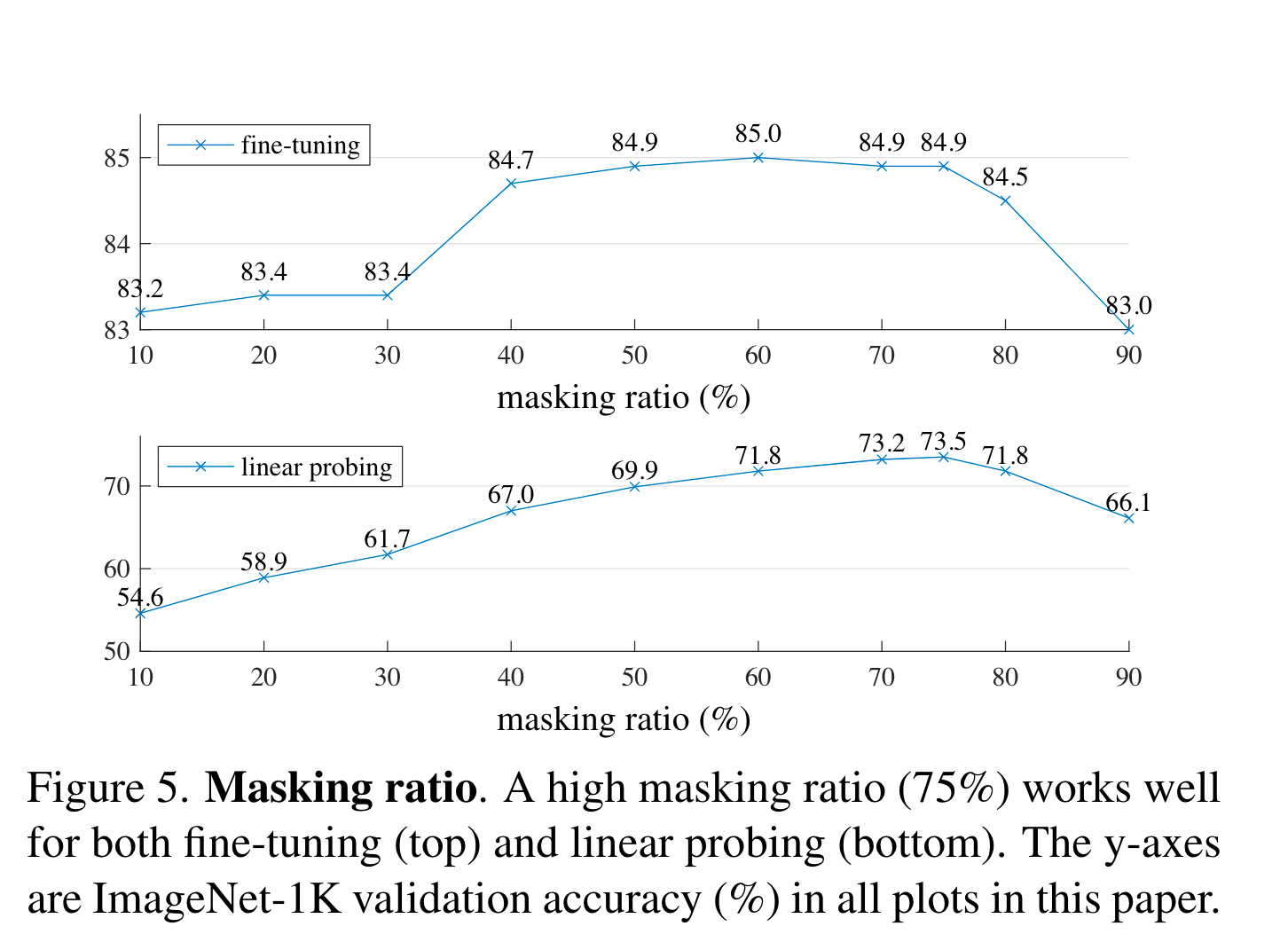

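- Information density differs: language is more abstract and higher-level than images, so when part of an image is masked, the missing region is easy to predict from the surrounding content. The authors' remedy is to raise the masking ratio, for example to 90%, at which point predicting the masked region is no longer so easy.

- The Decoder predicts at different semantic levels in CV and NLP: an NLP decoder predicts the words of a sentence, which carry high-level semantics, whereas a CV decoder reconstructs image pixels.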
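To make the masking ratios above concrete, here is a small arithmetic sketch of how many patches stay visible at a given ratio. The 224x224 image size and 16x16 patch size are assumptions chosen for illustration (a common ViT setting), and `visible_patches` is a hypothetical helper, not something from the paper's code.

```python
def visible_patches(image_size: int, patch_size: int, mask_ratio: float) -> int:
    """Number of patches left visible for a square image (assumed sizes)."""
    per_side = image_size // patch_size
    total = per_side * per_side
    # Round down, so the number of kept patches never exceeds the budget.
    return int(total * (1 - mask_ratio))


total = (224 // 16) ** 2  # 14 x 14 = 196 patches under the assumed sizes
for ratio in (0.15, 0.75, 0.90):
    kept = visible_patches(224, 16, ratio)
    print(f"mask_ratio={ratio:.2f}: {kept} of {total} patches visible")
```

At a BERT-style ratio most of the image remains visible, while at 75% or 90% only a small fraction of patches is left to predict from.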

Overall Architecture

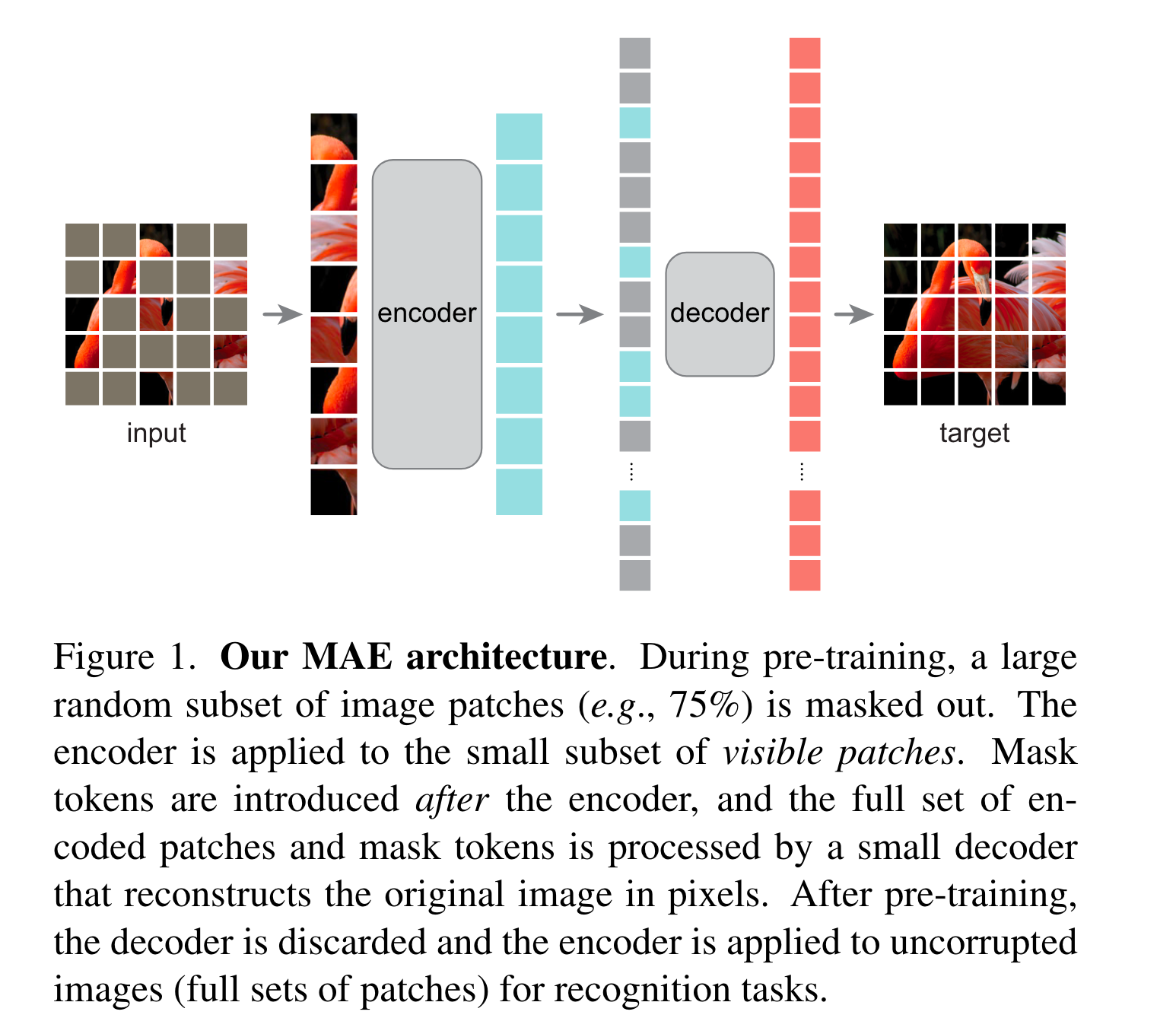

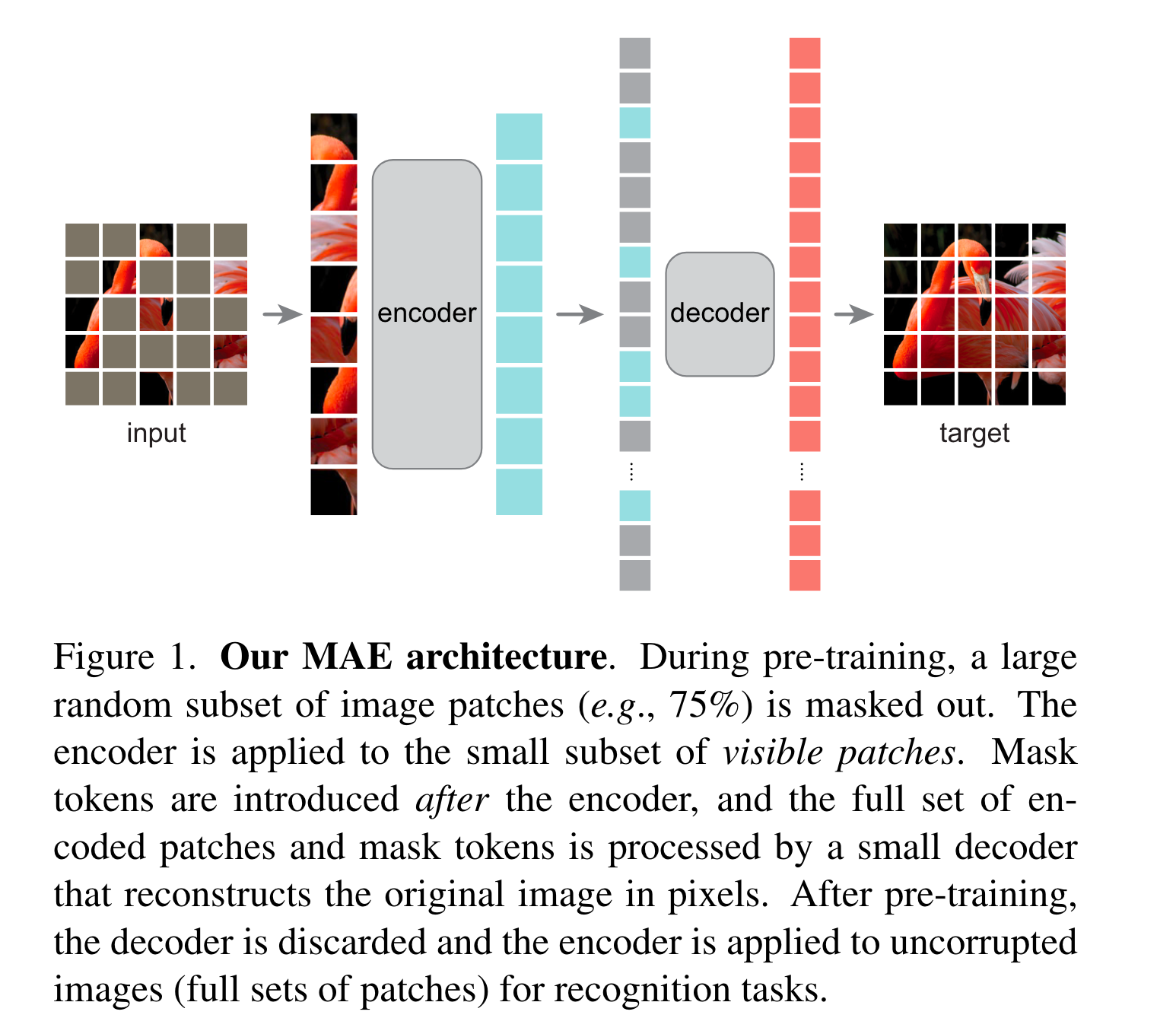

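The overall MAE architecture is shown in the figures above. It consists of an asymmetric Encoder and Decoder, and processes an image as follows:

- The input image is split into patches as in ViT; the patches are then randomly shuffled and the trailing portion (for example 75%) is masked out;

- The unmasked patches go through a linear projection, have positional encodings added, and are fed into a ViT-style Encoder;

- The Encoder output is unshuffled and combined with the masked patches (each represented by a shared, learnable vector), and together with positional encodings is passed to a Transformer-style Decoder;

- The Decoder output is decoded into an image;

- An MSE loss is computed against the original image.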
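The steps above can be sketched in plain Python on toy data. This is a minimal illustration under stated assumptions, not the authors' implementation: the encoder and decoder are replaced by identity stand-ins, positional encodings are omitted, and all function names are hypothetical. The loss here is restricted to masked patches, which follows the paper but is not spelled out in these notes.

```python
import random


def random_masking(num_patches, mask_ratio, rng):
    """Shuffle patch indices; keep the leading part, mask the trailing part."""
    len_keep = int(num_patches * (1 - mask_ratio))
    shuffled = list(range(num_patches))
    rng.shuffle(shuffled)
    return shuffled[:len_keep], shuffled[len_keep:]


def restore_sequence(encoded, keep_ids, num_patches, mask_token):
    """Unshuffle: put encoded patches back at their original positions
    and fill every masked position with the shared mask token."""
    full = [mask_token] * num_patches
    for pos, vec in zip(keep_ids, encoded):
        full[pos] = vec
    return full


def masked_mse(pred, target, masked_ids):
    """Mean squared error over the elements of masked patches only."""
    total, count = 0.0, 0
    for i in masked_ids:
        for p, t in zip(pred[i], target[i]):
            total += (p - t) ** 2
            count += 1
    return total / count


rng = random.Random(0)
num_patches, dim = 16, 4
# Toy "image": patch i is a vector filled with the value i.
patches = [[float(i)] * dim for i in range(num_patches)]

keep_ids, masked_ids = random_masking(num_patches, 0.75, rng)
encoded = [patches[i] for i in keep_ids]   # identity stand-in for the ViT Encoder
mask_token = [0.0] * dim                   # stand-in for the shared learnable vector
decoder_input = restore_sequence(encoded, keep_ids, num_patches, mask_token)
pred = decoder_input                       # identity stand-in for the Decoder
loss = masked_mse(pred, patches, masked_ids)
print(len(keep_ids), len(masked_ids), loss)
```

The point of the shuffle-then-slice design is that the Encoder only ever sees the kept patches, while the unshuffle step gives the Decoder a full-length sequence in the original patch order.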

Experimental Results

- ViT-L results on ImageNet

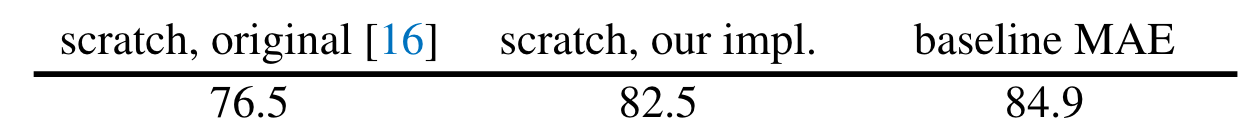

The first column is the result from the original ViT paper. The second is He's re-implementation, which is 6 points higher, mainly because it uses a larger weight_decay (0.3). The third is the result of fine-tuning on ImageNet after MAE pre-training, which is more than 2 points higher again.

- Effect of the masking ratio

Surprisingly, the best result occurs at a masking ratio as high as 75%, whereas BERT's typical mask ratio is only 15%.
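A back-of-envelope sketch of what these two ratios mean for encoder cost, given that only unmasked patches are fed to the Encoder. It assumes per-token layers scale linearly with sequence length and self-attention scales quadratically, and it ignores the Decoder and all constant factors; `relative_encoder_cost` is a hypothetical helper for illustration only.

```python
def relative_encoder_cost(mask_ratio: float) -> dict:
    """Encoder cost relative to processing every patch (rough estimate)."""
    keep = 1.0 - mask_ratio
    return {
        "tokens": keep,          # per-token layers: linear in sequence length
        "attention": keep ** 2,  # self-attention: quadratic in sequence length
    }


for name, ratio in (("BERT-style", 0.15), ("MAE", 0.75)):
    cost = relative_encoder_cost(ratio)
    print(f"{name} ratio {ratio:.2f}: "
          f"tokens x{cost['tokens']:.2f}, attention x{cost['attention']:.4f}")
```

Under these assumptions, a high masking ratio both makes the pretext task harder and shrinks the sequence the Encoder has to process.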
